I thought it would be interesting to do some image generation with ChatGPT, so I started using the DALL-E image APIs that OpenAI provides.

This configuration allows you to generate images via script and attach them to a record.

To do this, you will first need to work through this article to set up a basic ChatGPT configuration in your environment.

Once you have that set up, you are ready to continue. First, we need to add a new REST message endpoint. Open the “ChatGPT” REST message and create a new HTTP method with the following details:

| Field | Value |
| --- | --- |
| Name | Image |
| HTTP method | POST |
| Endpoint | https://api.openai.com/v1/images/generations |
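For reference, the Image method is a plain HTTPS POST to that endpoint with a JSON body. The Node.js sketch below (not ServiceNow code) builds the equivalent request so you can see what gets sent. `buildImageRequest` and `MODEL_LIMITS` are hypothetical names; the API key is assumed to come from the caller (in the base ChatGPT configuration it is presumably supplied by the ChatGPT REST message itself), and the per-model limits follow the OpenAI Images API reference for `dall-e-2` and `dall-e-3`.

```javascript
// Hypothetical helper: builds the same HTTP request the "Image" REST method sends.
// Assumption: the caller supplies the OpenAI API key directly.
const IMAGE_ENDPOINT = "https://api.openai.com/v1/images/generations";

// Per-model limits as documented in the OpenAI Images API reference.
const MODEL_LIMITS = {
  "dall-e-2": { maxPromptLength: 1000, sizes: ["256x256", "512x512", "1024x1024"], maxN: 10 },
  "dall-e-3": { maxPromptLength: 4000, sizes: ["1024x1024", "1792x1024", "1024x1792"], maxN: 1 },
};

function buildImageRequest(apiKey, prompt, options = {}) {
  const model = options.model || "dall-e-2";
  const size = options.size || "1024x1024";
  const n = options.n || 1;

  // Reject requests the API would refuse anyway, before sending anything.
  const limits = MODEL_LIMITS[model];
  if (!limits) throw new Error(`Unsupported model: ${model}`);
  if (!prompt || prompt.length > limits.maxPromptLength) {
    throw new Error(`Prompt must be 1-${limits.maxPromptLength} characters for ${model}`);
  }
  if (!limits.sizes.includes(size)) throw new Error(`Size ${size} is not valid for ${model}`);
  if (n < 1 || n > limits.maxN) throw new Error(`n must be between 1 and ${limits.maxN} for ${model}`);

  return {
    url: IMAGE_ENDPOINT,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    // b64_json matches the script include below, which attaches the decoded bytes.
    body: JSON.stringify({ model, prompt, n, size, response_format: "b64_json" }),
  };
}
```

With Node.js 18 or later you could send it with `fetch(req.url, req)`; `fetch` ignores the extra `url` property on the options object.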

Next, we are going to create a brand new script include. It extends the ChatGPT script include that was created in the initial configuration. Create a new script include with the following details:

| Field | Value |
| --- | --- |
| Name | ChatGPTImageProcessing |
| Description | Provides Dall-E API configuration. |

```javascript
var ChatGPTImageProcessing = Class.create();
ChatGPTImageProcessing.prototype = Object.extendsObject(global.ChatGPT, {

    initialize: function() {},

    // Sends the prompt to the DALL-E image generation endpoint and returns the
    // generated image as a base64 string, or null if the call fails.
    createImage: function(requested_image_text) {
        try {
            var request = new sn_ws.RESTMessageV2("ChatGPT", "Image");

            var payload = {
                // "model": "dall-e-3",
                "model": "dall-e-2",
                "prompt": requested_image_text,
                // Ask for base64 rather than a URL so the image can be attached directly
                "response_format": "b64_json"
            };

            this.logDebug("Payload: " + JSON.stringify(payload));
            request.setRequestBody(JSON.stringify(payload));
            request.setRequestHeader("Content-Type", "application/json");

            var response = request.execute();
            var httpResponseStatus = response.getStatusCode();
            // Coerce to a string so a missing header does not throw below
            var httpResponseContentType = response.getHeader('Content-Type') + '';

            // The Content-Type may carry a charset suffix, so only match the prefix
            if (httpResponseStatus === 200 && httpResponseContentType.indexOf('application/json') === 0) {
                this.logDebug("ChatGPT Imaging API call was successful");
                this.logDebug("ChatGPT Response was: " + response.getBody());

                // Get the base64 image from the first result and return it
                var parseResponse = JSON.parse(response.getBody());
                return parseResponse.data[0].b64_json;
            }

            gs.error('Error calling the ChatGPT API. HTTP Status: ' + httpResponseStatus + " - body is " + response.getBody(), "ChatGPTImageProcessing");
        } catch (ex) {
            // Java exceptions expose getMessage(); JavaScript errors expose message
            var exception_message = ex.getMessage ? ex.getMessage() : ex.message;
            gs.error(exception_message, "ChatGPTImageProcessing");
        }
        return null;
    },

    // Decodes the base64 image and attaches it to the given GlideRecord as a PNG.
    // Returns the sys_id of the new attachment, or null if there was nothing to attach.
    addImageAsAttachment: function(record, chatGPTResponse, fileName) {
        if (!chatGPTResponse) {
            gs.error('No image data was provided, so nothing was attached.', "ChatGPTImageProcessing");
            return null;
        }

        // Make sure the filename ends with .png
        fileName = /\.png$/i.test(fileName) ? fileName : fileName + ".png";

        // The image API responds using either a URL or base64. We use base64 because it can be attached directly.
        var base64Bytes = GlideStringUtil.base64DecodeAsBytes(chatGPTResponse);

        var gsa = new GlideSysAttachment();
        var attachmentId = gsa.write(record, fileName, 'image/png', base64Bytes); // Write the attachment to the record
        gs.info('Attachment created successfully: ' + attachmentId);
        return attachmentId;
    },

    type: 'ChatGPTImageProcessing'
});
```

There are two functions here. `createImage` sends the prompt to the DALL-E API and returns the generated image as a base64-encoded string, and `addImageAsAttachment` decodes that string and attaches the image to the GlideRecord you provide.

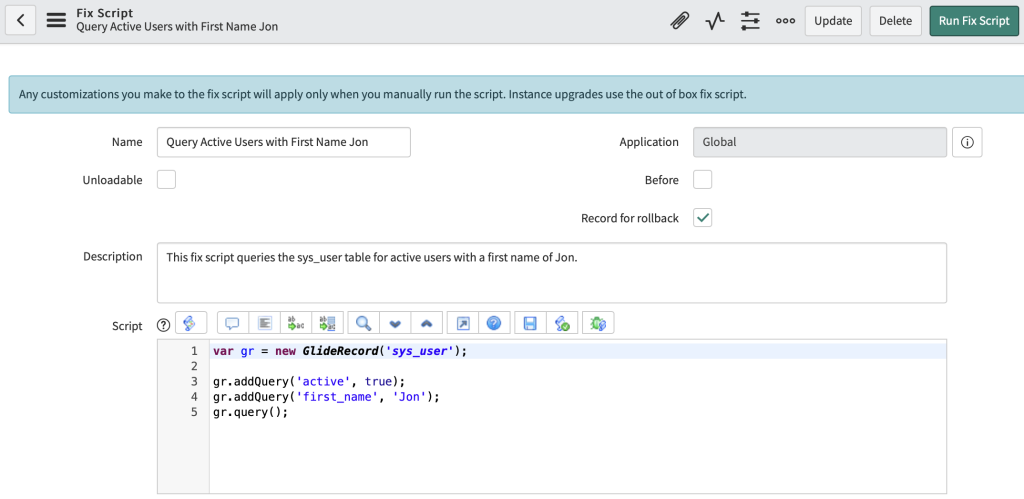
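The success branch of `createImage` comes down to pulling `data[0].b64_json` out of the JSON response. Here is a Node.js sketch of the same logic with the error path made explicit, useful if you want to test response handling outside the instance. `extractBase64Image` and `looksLikePng` are hypothetical helpers; the error branch relies on OpenAI error responses carrying an `error.message` field, and the PNG check assumes the API returns PNG data, which is also what `addImageAsAttachment` assumes when it sets `image/png`.

```javascript
// Hypothetical helper mirroring createImage(): given the HTTP status, the
// Content-Type header and the raw body, return the base64 image string or throw.
function extractBase64Image(status, contentType, rawBody) {
  // Accept "application/json" with or without parameters such as "; charset=utf-8".
  const isJson = typeof contentType === "string" && contentType.toLowerCase().startsWith("application/json");
  const parsed = isJson ? JSON.parse(rawBody) : null;

  if (status !== 200 || !parsed) {
    // OpenAI error bodies look like {"error": {"message": "..."}}; fall back to the raw body.
    const detail = parsed && parsed.error && parsed.error.message ? parsed.error.message : rawBody;
    throw new Error(`Image API call failed (HTTP ${status}): ${detail}`);
  }
  if (!Array.isArray(parsed.data) || parsed.data.length === 0 || !parsed.data[0].b64_json) {
    throw new Error("Response did not contain data[0].b64_json");
  }
  return parsed.data[0].b64_json;
}

// Every PNG file starts with these eight signature bytes.
const PNG_SIGNATURE = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

// Decodes the base64 string and checks the PNG signature.
function looksLikePng(base64) {
  const bytes = Buffer.from(base64, "base64");
  return bytes.length >= 8 && bytes.subarray(0, 8).equals(PNG_SIGNATURE);
}
```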

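To test this, create and run the fix script below. It should attach a new image called “cartoon_cat.png” to the fix script record itself. The script looks up its own record by sys_id, so replace the sys_id in the `get()` call with the sys_id of your fix script.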

| Field | Value |
| --- | --- |
| Name | ChatGPTImageProcessing Test |
| Description | Testing ChatGPT image processing |

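```javascript
// Replace this sys_id with the sys_id of your own fix script record
var fix_script = new GlideRecord('sys_script_fix');

if (fix_script.get('9f0dea4193400210d6f7fbf08bba10d4')) {
    var si = new global.ChatGPTImageProcessing();
    var image = si.createImage("Create an image of a fluffy cartoon cat that is wearing sunglasses");

    // Attach the image only if the API call returned one
    if (image) {
        si.addImageAsAttachment(fix_script, image, "cartoon_cat.png");
    }
} else {
    gs.error('Fix script record not found. Check the sys_id in the get() call.');
}
```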
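If you want to inspect the decoded image outside the instance, the logic of `addImageAsAttachment` can be mirrored in Node.js by writing the bytes to a local file instead of calling `GlideSysAttachment`. This is a hypothetical sketch: `ensurePngExtension` and `saveBase64Image` are illustrative names, not part of any ServiceNow API, and the extension check only looks at the end of the file name.

```javascript
const fs = require("fs");
const path = require("path");

// Appends ".png" only when the name does not already end with it (case-insensitive),
// so "cat.png.txt" becomes "cat.png.txt.png" rather than being left as-is.
function ensurePngExtension(fileName) {
  return /\.png$/i.test(fileName) ? fileName : `${fileName}.png`;
}

// Offline counterpart of addImageAsAttachment(): decodes the base64 string and
// writes the raw bytes to <directory>/<fileName>.png, returning the full path.
function saveBase64Image(base64, directory, fileName) {
  if (!base64) throw new Error("No image data to save");
  const target = path.join(directory, ensurePngExtension(fileName));
  fs.writeFileSync(target, Buffer.from(base64, "base64"));
  return target;
}
```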

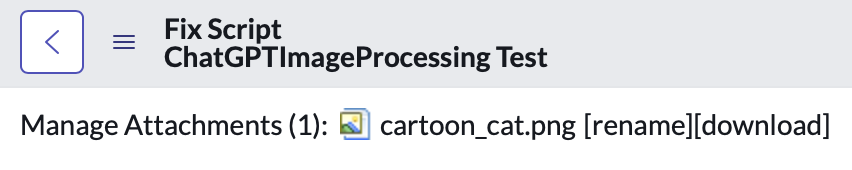

If everything goes well, the image is attached to the fix script record (you might need to refresh the form after running the script to see the attachment).

The image it made me was this! I thought it was pretty cool.